Yesterday I got alerts for some nodes in the CentOS infra from our monitoring system, and some folks confirmed the problem by reporting errors directly in our #centos-devel IRC channel on Freenode.

The impacted nodes were the ones we use for the mirrorlist service. For those who don't know what they are used for, here is a quick overview of what happens when you run "yum update" on your CentOS node:

- yum analyzes the .repo files contained under /etc/yum.repos.d/

- for CentOS repositories, it knows that it has to use a list of mirrors provided by a server hosted within the centos infra (mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=updates&infra=$infra )

- yum then contacts one of the servers behind "mirrorlist.centos.org" (we have 4 nodes so far: two in Europe and two in the USA, all available over IPv4 and IPv6)

- mirrorlist checks the source IP and sends back a list of current, up-to-date mirrors in the same country (some GeoIP checks are done)

- yum then opens connections to those validated mirrors
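
To make the request in the steps above concrete, here is a minimal sketch of how the mirrorlist URL is assembled from the yum variables. The function name and the example values (7, x86_64, stock) are assumptions for illustration, not something taken from a specific node.

```shell
# Build the mirrorlist URL that yum would request.
# Usage: mirrorlist_url RELEASE ARCH [REPO] [INFRA]
# REPO defaults to "updates" and INFRA to "stock" (assumed example values).
mirrorlist_url() {
    printf 'http://mirrorlist.centos.org/?release=%s&arch=%s&repo=%s&infra=%s\n' \
        "$1" "$2" "${3:-updates}" "${4:-stock}"
}

# Example: print the URL, then (optionally) fetch the mirror list by hand.
mirrorlist_url 7 x86_64 updates stock
# curl -s "$(mirrorlist_url 7 x86_64 updates stock)"
```

Fetching that URL by hand should return the same plain-text list of mirrors that yum receives for your source IP.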

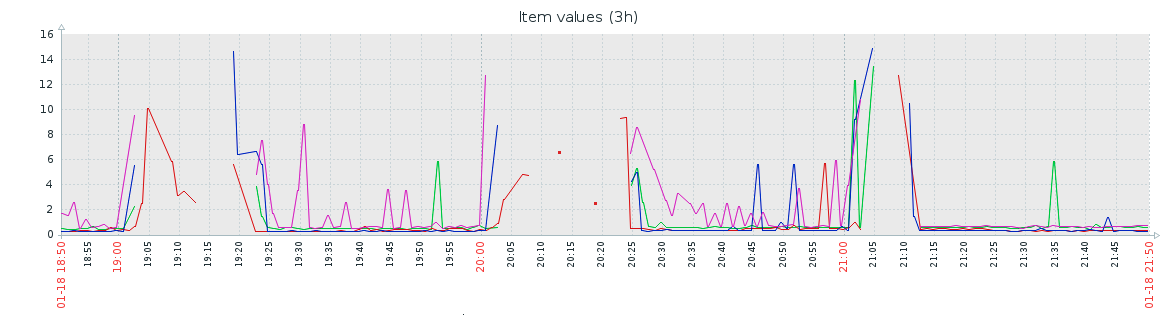

We monitor the response time for those services, and average response time is usually < 1sec (with some exceptions, mostly due to network latency also for nodes in other continents). But yesterday the values where not only higher, but also even completely missing from our monitoring system, so no data received. Here is a graph from our monitoring/Zabbix server :

So clearly something was happening, and it was time to look for patterns.

Our monitoring also showed that the number of network connections tracked by the kernel was suddenly higher than usual. As soon as your node does some state tracking with netfilter (for example with -m state --state ESTABLISHED,RELATED), the kernel keeps each tracked connection in memory. You can easily retrieve the number of actively tracked connections like this:

cat /proc/sys/net/netfilter/nf_conntrack_count

So it's easy to guess what happens when the maximum (/proc/sys/net/netfilter/nf_conntrack_max) is reached: the kernel drops packets (from dmesg):

nf_conntrack: table full, dropping packet
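
To spot this before the kernel starts dropping packets, you can compare the current count against the maximum. The following is only a sketch: the function names and the 80% threshold are assumptions, not what our monitoring actually runs.

```shell
# Print conntrack table usage as an integer percentage.
# Usage: conntrack_usage_pct COUNT MAX
conntrack_usage_pct() {
    echo $(( $1 * 100 / $2 ))
}

# Succeed (exit 0) when usage is at or above THRESHOLD percent.
# Usage: conntrack_near_limit COUNT MAX THRESHOLD
conntrack_near_limit() {
    [ "$(conntrack_usage_pct "$1" "$2")" -ge "$3" ]
}

# Example on a live node (80 is a hypothetical threshold):
# count=$(cat /proc/sys/net/netfilter/nf_conntrack_count)
# max=$(cat /proc/sys/net/netfilter/nf_conntrack_max)
# conntrack_near_limit "$count" "$max" 80 && \
#     echo "conntrack table at $(conntrack_usage_pct "$count" "$max")%"
```

Keeping the arithmetic separate from the /proc reads makes the check easy to reuse from a monitoring agent or a cron job.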

The default values depend on the available memory, and they can be changed at runtime. Don't forget to tune the hash size as well (the basic rule is nf_conntrack_max / 4). On the mirrorlist nodes we had the default value of 262144 (so yes, keeping track of that many connections in memory), so to quickly get the service back in shape:

new_number="524288"

echo ${new_number} > /proc/sys/net/netfilter/nf_conntrack_max

echo $(( $new_number / 4 )) > /sys/module/nf_conntrack/parameters/hashsize

Another option was to flush the table (you can do that with conntrack -F, a tool from the conntrack-tools package), but that is only a temporary fix, and it destroys the information you need for proper troubleshooting (see below).

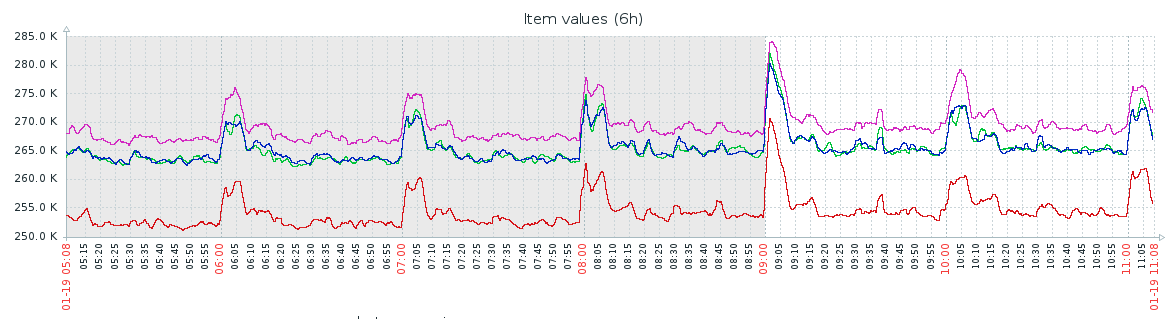

Here is the Zabbix graph showing that for some nodes it was higher than default values, but now kernel wasn't dropping packets.

We could then confirm that the service was working fine again (no longer "flapping").

One could think that this was the whole solution and stop investigating there. But what is the root cause? What opened so many (unclosed) connections to those mirrorlist nodes? Let's dive into the nf_conntrack table again!

Not only do you have the number of tracked connections (through /proc/sys/net/netfilter/nf_conntrack_count), but also the full details of each one. So let's dump that into a file for full analysis and try to find a pattern:

cat /proc/net/nf_conntrack > conntrack.list

cat conntrack.list |awk '{print $7}'|sed 's/src=//g'|sort|uniq -c|sort -n -r|head

Here we go: the same range of IPs on all our mirrorlist servers, holding thousands of ESTABLISHED connections. I'm not going to give all the details (the goal of this blog post isn't finger pointing), but with that we had identified the source of the issue. We contacted the network team behind those IPs to report the behaviour. It is still being tracked, but I'm wondering whether a firewall doing NAT wasn't closing TCP connections at all. More to come.
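
The per-source count used on the dump can also be written as a small reusable function. This is a sketch under assumptions: the function name is made up, and it only counts entries in ESTABLISHED state, looking up the first src= field by name instead of relying on its column position.

```shell
# Count ESTABLISHED entries per source address in a conntrack dump.
# Usage: top_sources FILE [N]   (N defaults to 10)
top_sources() {
    awk '
        / ESTABLISHED / {
            # The first src= field is the original (client side) direction.
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^src=/) {
                    count[substr($i, 5)]++
                    break
                }
            }
        }
        END { for (ip in count) print count[ip], ip }
    ' "$1" | sort -k1,1nr -k2,2 | head -n "${2:-10}"
}

# Example, using the dump taken earlier:
# top_sources conntrack.list 10
```

Each output line is a connection count followed by the source address, highest count first.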

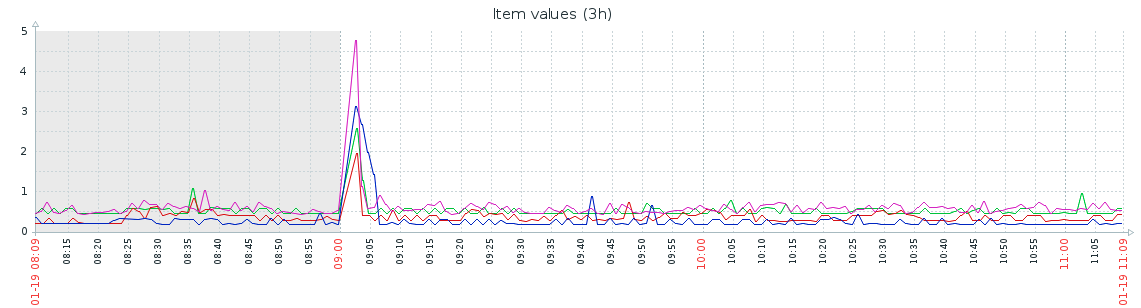

At least mirrorlist response time is now back at usual state :

You can now also let your configuration management set those parameters through a dedicated .conf file under /etc/sysctl.d/ to ensure that they are applied automatically.
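
As a rough sketch of what such a persistent configuration could contain, the functions below generate the lines for a given maximum. The function names and the file names in the usage comments are hypothetical. It also assumes that the hash size, being a module parameter rather than a sysctl, is persisted through /etc/modprobe.d/ instead.

```shell
# Emit the sysctl.d line for a given nf_conntrack_max.
conntrack_sysctl_conf() {
    printf 'net.netfilter.nf_conntrack_max = %s\n' "$1"
}

# Emit the modprobe.d line, with hashsize = max / 4 (the rule used in this post).
conntrack_modprobe_conf() {
    printf 'options nf_conntrack hashsize=%s\n' "$(( $1 / 4 ))"
}

# Usage as root (file names below are hypothetical):
# conntrack_sysctl_conf 524288   > /etc/sysctl.d/90-conntrack.conf
# conntrack_modprobe_conf 524288 > /etc/modprobe.d/nf_conntrack.conf
# sysctl --system    # reload all sysctl configuration files
```

Note that the sysctl key only exists once the nf_conntrack module is loaded, so the setting takes effect only if the module is already present when the sysctl files are applied.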