It's no secret anymore that I use Ansible for a lot of things. That goes from simple "one shot" actions on multiple nodes with ansible to configuration management and deployment tasks with ansible-playbook. One of the things I also really like about Ansible is that it's a great orchestration tool as well.

For example, in some WSOA flows you can have a bunch of servers behind load balancer nodes. When you want to put a backend/web server node in maintenance mode (to change its configuration, update a package, update the app, whatever), you just "remove" that node from the production flow, do what you need to do, verify it's up again and put that node back in production. That is what makes "rolling updates" interesting: the flow stays in production 24/7 while you work on one node.

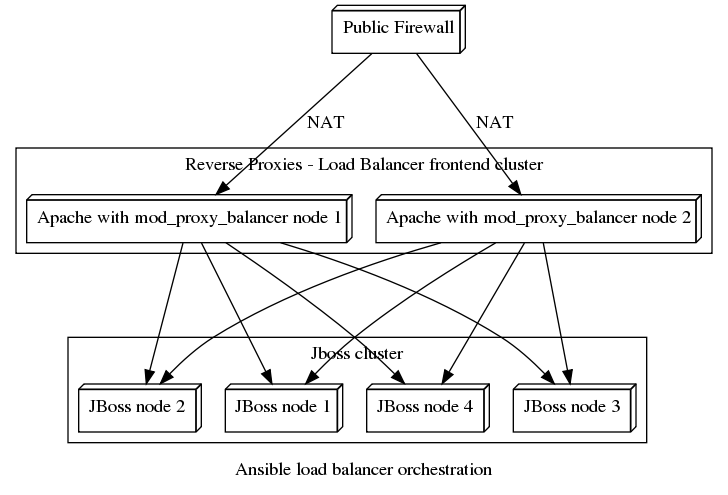

But what if you're not in charge of the whole infrastructure? For example, you might be in charge of some servers, but not of the load balancers in front of them. Let's consider the following situation, and how we can still use Ansible to disable/enable a backend server behind Apache reverse proxies.

So here is the (simplified) situation: two Apache reverse proxies (using the mod_proxy_balancer module) load balance traffic to four backend nodes (JBoss in our simplified case). We can't directly touch those upstream Apache nodes, but we can still interact with them, thanks to the fact that "balancer manager support" is active (and protected!).

Let's have a look at a (simplified) Ansible inventory file:

    [jboss-cluster]
    jboss-1
    jboss-2
    jboss-3
    jboss-4

    [apache-group-1]
    apache-node-1
    apache-node-2

Let's now create a generic (write once, use many times) task file to disable a backend node in Apache:

    ---
    ##############################################################################
    #
    # This task can be included in a playbook to pause a backend node
    # being load balanced by Apache Reverse Proxies
    # The following variables need to be defined :
    # - ${apache_rp_backend_url} : the URL of the backend server, as known by Apache server
    # - ${apache_rp_backend_cluster} : the name of the cluster as defined on the Apache RP (the group the node is member of) (e.g. internalasync)
    # - ${apache_rp_group} : the name of the group declared in hosts.cfg containing Apache Reverse Proxies
    # - ${apache_rp_user}: the username used to authenticate against the Apache balancer-manager (e.g. clusteradmin)
    # - ${apache_rp_password}: the password used to authenticate against the Apache balancer-manager (e.g. 5added592b)
    # - ${apache_rp_balancer_manager_uri}: the URI where to find the balancer-manager Apache mod
    #
    ##############################################################################
    - name: Disabling the worker in Apache Reverse Proxies
      local_action: shell /usr/bin/curl -k --user ${apache_rp_user}:${apache_rp_password} "https://${item}/${apache_rp_balancer_manager_uri}?b=${apache_rp_backend_cluster}&w=${apache_rp_backend_url}&nonce=$(curl -k --user ${apache_rp_user}:${apache_rp_password} https://${item}/${apache_rp_balancer_manager_uri} |grep nonce|tail -n 1|cut -f 3 -d '&'|cut -f 2 -d '='|cut -f 1 -d '"')&dw=Disable"
      with_items: ${groups.${apache_rp_group}}

    - name: Waiting 20 seconds to be sure no traffic is being sent anymore to that worker backend node
      pause: seconds=20

The interesting bit is the with_items one: it uses the apache_rp_group variable to know which Apache servers sit upstream (you can have multiple nodes/clusters) and runs that command once for every host of that group, as listed in the inventory!
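For readers on a recent Ansible release, where variables are written with Jinja2 `{{ ... }}` templating rather than the `${...}` form used above, the same group lookup can be sketched as follows. This is only an illustration and not part of the original task file: the debug task is a hypothetical helper that lists the balancer-manager URLs the real task would contact, and it assumes `apache_rp_group` and `apache_rp_balancer_manager_uri` are defined for the host being played.

```yaml
# Sketch in Jinja2 syntax: list the reverse proxies the disable task would contact.
# Assumption: apache_rp_group and apache_rp_balancer_manager_uri are defined
# for the current host (for example in group_vars).
- name: Show which reverse proxies are in front of this backend
  debug:
    msg: "https://{{ item }}/{{ apache_rp_balancer_manager_uri }}"
  # groups[...] returns the list of hosts in the named inventory group
  with_items: "{{ groups[apache_rp_group] }}"
```

With the inventory shown earlier, the loop would iterate over apache-node-1 and apache-node-2.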

We can now, in the "rolling-updates" playbook, just call the previous tasks (assuming we saved them as ../tasks/apache-disable-worker.yml):

    ---
    - hosts: jboss-cluster
      serial: 1
      user: root
      tasks:
        - include: ../tasks/apache-disable-worker.yml
        # etc/etc ... (your maintenance tasks)
        - wait_for: port=8443 state=started
        - include: ../tasks/apache-enable-worker.yml

But wait! As you've seen, we still need to declare some variables: let's do that in the inventory, under group_vars and host_vars!

group_vars/jboss-cluster:

    # Apache reverse proxies settings
    apache_rp_group: apache-group-1
    apache_rp_user: my-admin-account
    apache_rp_password: my-beautiful-pass
    apache_rp_balancer_manager_uri: balancer-manager-hidden-and-redirected

host_vars/jboss-1:

    apache_rp_backend_url: 'https://jboss1.myinternal.domain.org:8443'
    apache_rp_backend_cluster: nameofmyclusterdefinedinapache

Now when we use that playbook, a local action will interact with the balancer manager to disable that backend node while we do maintenance.

I'll let you imagine (and create) a ../tasks/apache-enable-worker.yml file to enable it again (which you'll call at the end of your playbook).
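As a starting point, here is a minimal sketch of what that file could look like. It is not the original author's file. It is written in the Jinja2 `{{ ... }}` syntax of current Ansible releases rather than the `${...}` form used in the disable task, and it assumes the balancer-manager accepts `dw=Enable` in the same way it accepts `dw=Disable`. The shell variables `base`, `auth` and `nonce` are only there to keep the command readable.

```yaml
---
# tasks/apache-enable-worker.yml (sketch): counterpart of apache-disable-worker.yml.
# Assumption: the balancer-manager accepts dw=Enable just like dw=Disable.
- name: Enabling the worker in Apache Reverse Proxies
  shell: |
    base="https://{{ item }}/{{ apache_rp_balancer_manager_uri }}"
    auth="{{ apache_rp_user }}:{{ apache_rp_password }}"
    # Scrape a fresh nonce from the balancer-manager page, as the disable task does.
    nonce=$(/usr/bin/curl -k --user "$auth" "$base" | grep nonce | tail -n 1 | cut -f 3 -d '&' | cut -f 2 -d '=' | cut -f 1 -d '"')
    # Ask the balancer-manager to put the worker back in the pool.
    /usr/bin/curl -k --user "$auth" "$base?b={{ apache_rp_backend_cluster }}&w={{ apache_rp_backend_url }}&nonce=$nonce&dw=Enable"
  # Run from the control machine, once per reverse proxy in the group.
  delegate_to: localhost
  with_items: "{{ groups[apache_rp_group] }}"
```

It reuses the same group_vars and host_vars as the disable task, so nothing else needs to be declared.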